Paid search marketers look to technology to provide them with a competitive advantage.

AdWords is host to a range of increasingly sophisticated features, but there are also numerous third-party tools that add extra insight. Below, we review some of the essential tools to achieve PPC success.

The paid search industry is set to develop significantly through 2018, both in its array of options for advertisers and in its level of sophistication as a marketing channel. The pace of innovation is only accelerating, and technology is freeing search specialists to spend more time on strategy, rather than repetitive tasks.

Google continues to add new machine learning algorithms to AdWords that improve the efficacy of paid search efforts, which is undoubtedly a welcome development. This technology ultimately becomes something of an equalizer, however, given that everyone has access to these same tools.

It is at the intersection of people and technology that brands can thrive in PPC marketing. Better training and more enlightened strategy can help get the most out of Google’s AdWords and AdWords Editor, but there are further tools that can add a competitive edge.

The technologies below can save time, uncover insights, add scale to data analysis, or do all three.

Keyword research tools

Identifying the right keywords to add to your paid search account is, of course, a fundamental component of a successful campaign.

Google will suggest a number of relevant queries within the Keyword Planner tool, but it does have some inherent limitations. The list of keywords provided within this tool is far from comprehensive and, given the potential rewards on offer, sophisticated marketers would be well advised to look for a third-party solution.

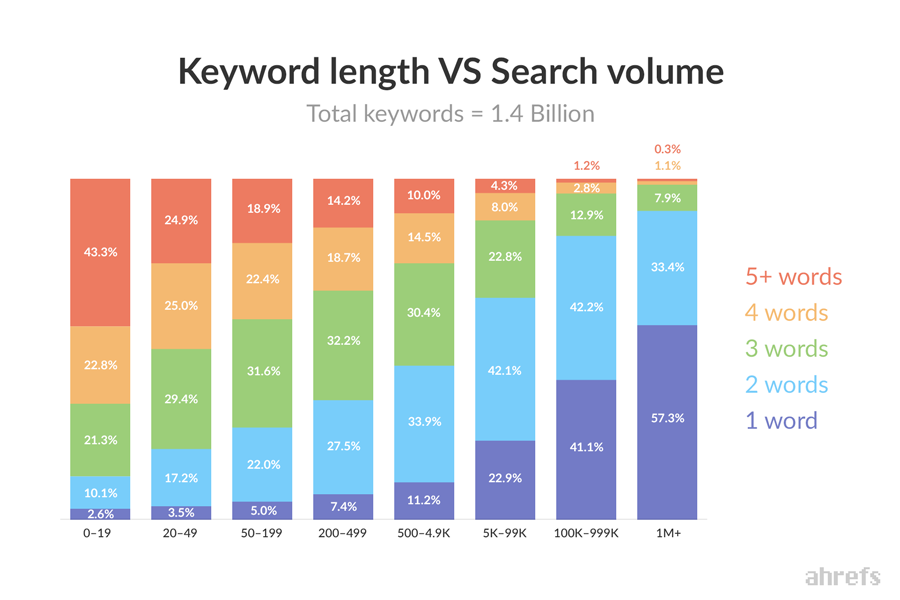

A recent post by Wil Reynolds at Seer Interactive brought to light just how important it is to build an extensive list of target keywords, as consumers are searching in multifaceted ways, across devices and territories. According to Ahrefs, 85% of all searches contain three or more words, and although shorter keywords tend to have higher search volumes, the long tail contains a huge amount of value too.

Add in growing trends like the adoption of voice search and the picture becomes more complex still. In essence, it is necessary to research beyond Google Keyword Planner to uncover these opportunities.

Keywordtool.io takes an initial keyword suggestion as its stimulus and uses this to come up with up to 750 suggested queries to target. This is achieved in part through the use of Google Autocomplete to pull in a range of related terms that customers typically search for. A Pro licence for this tool starts at $48 per month.

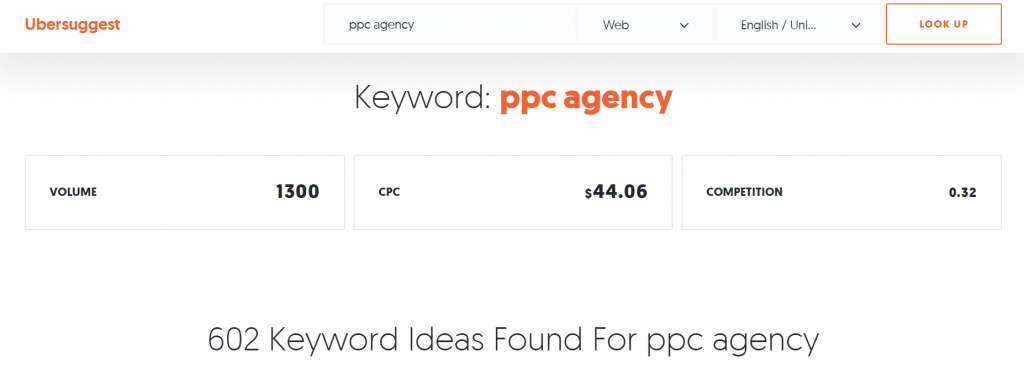

Ubersuggest is another long-standing keyword tool that search marketers use to find new, sometimes unexpected, opportunities to communicate with customers via search. It groups together suggested keywords based on their lexical similarity and they can be exported to Excel.

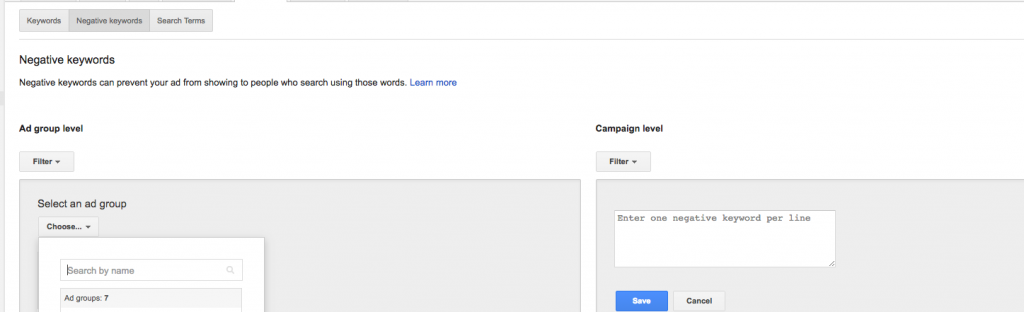

This tool also allows marketers to add in negative keywords to increase the relevance of their results.
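To make the two ideas above concrete (excluding negative keywords, then grouping what remains), here is a minimal Python sketch. It is an illustration only and not how Ubersuggest works internally: the function names, the sample suggestions, and the simple "shared word" grouping rule are all assumptions made for this example.

```python
from collections import defaultdict


def filter_negatives(keywords, negatives):
    """Drop any keyword that contains a negative term as a whole word."""
    negative_set = {n.lower() for n in negatives}
    return [kw for kw in keywords if not negative_set & set(kw.lower().split())]


def group_by_shared_word(keywords, seed):
    """Group keywords under each word they contain that is not in the seed."""
    seed_words = set(seed.lower().split())
    groups = defaultdict(list)
    for kw in keywords:
        for word in kw.lower().split():
            if word not in seed_words:
                groups[word].append(kw)
    return dict(groups)


# Hypothetical suggestions for the seed "running shoes".
suggestions = [
    "running shoes for women",
    "free running shoes",
    "running shoes for men",
    "trail running shoes",
]

kept = filter_negatives(suggestions, negatives=["free"])
groups = group_by_shared_word(kept, seed="running shoes")

for word, members in groups.items():
    print(word, "->", members)
```

Matching on whole words keeps the filter predictable; a production list would probably also need phrase-level negatives and handling for plurals and misspellings.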

We have written about the benefits of Google Trends for SEO, but the same logic applies to PPC. Google Trends can be a fantastic resource for paid search, as it allows marketers to identify peaks in demand. This insight can be used to target terms as their popularity rises, allowing brands to attract clicks for a lower cost.

Google Trends has been updated recently and includes a host of new features, so it is worth revisiting for marketers who may not have found it robust enough in its past iterations.
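As a rough illustration of spotting peaks in demand, the sketch below flags weeks where interest is well above the series average. It assumes the interest values have already been exported from Google Trends as a plain list of weekly numbers; the sample figures, the function name, and the 1.5x threshold are invented for the example and are not part of any Google tool.

```python
def find_demand_peaks(interest, threshold=1.5):
    """Return indexes of local maxima that are at least `threshold` times the mean."""
    if not interest:
        return []
    mean = sum(interest) / len(interest)
    if mean == 0:
        return []
    peaks = []
    for i, value in enumerate(interest):
        # Treat missing neighbours at either end of the series as very low.
        left = interest[i - 1] if i > 0 else float("-inf")
        right = interest[i + 1] if i < len(interest) - 1 else float("-inf")
        if value >= threshold * mean and value > left and value >= right:
            peaks.append(i)
    return peaks


# Hypothetical weekly interest scores (0-100 scale) for one search term.
weekly_interest = [20, 22, 25, 60, 30, 24, 21, 80, 35, 23]

peak_weeks = find_demand_peaks(weekly_interest)
print("Peak weeks (zero-based):", peak_weeks)
```

If peaks recur at the same point each year, that is a reasonable cue to schedule budget and ad copy changes ahead of the rise rather than during it.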

Answer the Public is another great tool for understanding longer, informational queries that relate to a brand’s products or services. It creates a visual representation of the most common questions related to a head term, such as ‘flights to paris’.

As the role of paid search evolves into more of a full-funnel channel that covers informational queries as well as transactional terms, tools like this one will prove invaluable. The insights it reveals can be used to tailor ad copy, and the list of questions can be exported and uploaded to AdWords to see if there is a sizeable opportunity to target these questions directly.

For marketers that want to investigate linguistic trends within their keyword set, it’s a great idea to use an Ngram viewer. There are plenty of options available, but this tool is free and effective.
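For readers curious about what an n-gram analysis of a keyword set involves, here is a small Python sketch that counts the most frequent word pairs across a list of keywords. The keyword list and function name are made up for illustration, and a dedicated Ngram tool will offer far more than this.

```python
from collections import Counter


def ngram_counts(keywords, n=2):
    """Count every run of `n` consecutive words across all keywords."""
    counts = Counter()
    for kw in keywords:
        words = kw.lower().split()
        for i in range(len(words) - n + 1):
            counts[" ".join(words[i:i + n])] += 1
    return counts


# Hypothetical keyword set exported from a paid search account.
keywords = [
    "cheap flights to paris",
    "flights to paris from london",
    "last minute flights to rome",
]

bigrams = ngram_counts(keywords, n=2)
for phrase, count in bigrams.most_common(3):
    print(f"{phrase}: {count}")
```

Joining these counts to cost and conversion data per keyword would show which recurring phrases are associated with strong or weak performance.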

Competitor analysis tools

AdWords Auction Insights is an essential tool for competitor analysis, as it reveals the impression share for different sites across keyword sets, along with average positions and the rate of overlap between rival sites.

This should be viewed as the starting point for competitor analysis, however. There are other technologies that provide a wider range of metrics for this task, including Spyfu and SEMrush.

Spyfu’s AdWords History provides a very helpful view of competitor strategies over time. This reveals what their ad strategies have been, but also how frequently they are changed. As such, it is a helpful blend of qualitative and quantitative research that shows not just how brands are positioning their offering, but also how much they have been willing to pay to get it in front of their audience.

A basic licence for Spyfu starts at $33 per month.

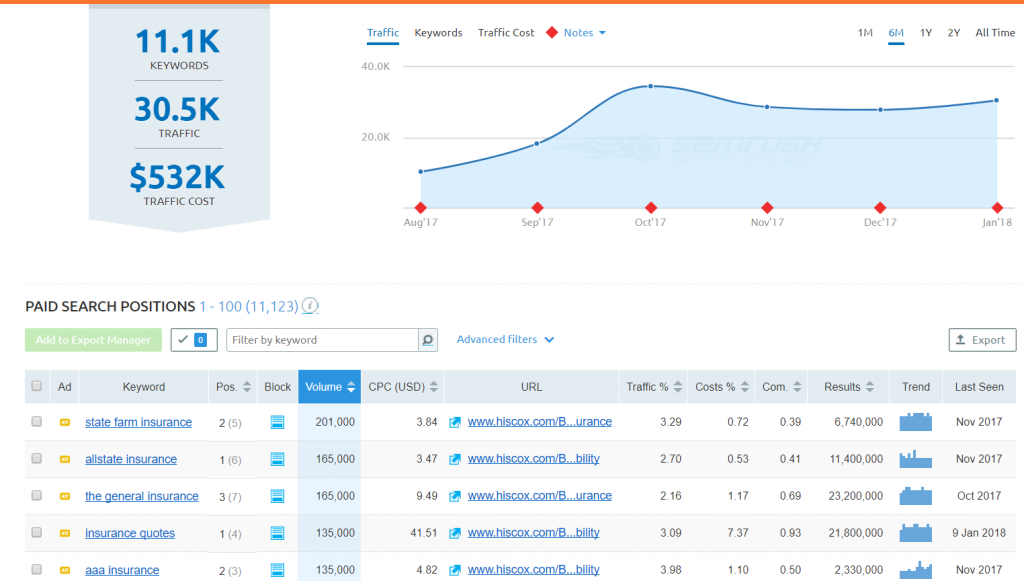

SEMrush is a great tool for competitor analysis, both for paid search and its organic counterpart. This software shows the keywords that a domain ranks against for paid search and calculates the estimated traffic the site has received as a result.

The Product Listing Ads features are particularly useful, as they provide insight into a competitor’s best-performing ads and their core areas of focus for Google Shopping.

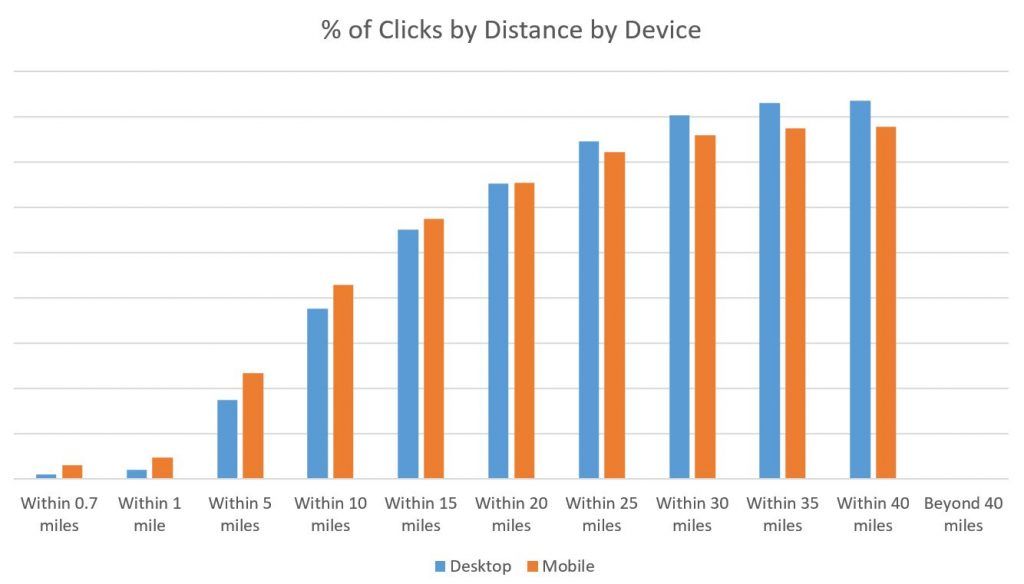

It is also easy to compare desktop data to mobile data through SEMrush, a feature that has become increasingly powerful as the shift towards mobile traffic continues.

A licence for SEMrush starts at $99.95 per month.

Used in tandem with AdWords Auction Insights, these tools create a fuller picture of competitor activities.
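The Auction Insights metrics mentioned earlier are simple ratios, and a short Python sketch may help clarify what they measure. It assumes a simplified log in which every auction is one your ads were eligible for, recorded as the set of domains that received an impression; the domains and function names are hypothetical, and Google does not expose auction-level data in this form.

```python
def impression_share(auctions, domain):
    """Share of eligible auctions in which `domain` received an impression."""
    if not auctions:
        return 0.0
    return sum(1 for shown in auctions if domain in shown) / len(auctions)


def overlap_rate(auctions, own_domain, rival_domain):
    """Of the auctions where own_domain appeared, the share where the rival also appeared."""
    own = [shown for shown in auctions if own_domain in shown]
    if not own:
        return 0.0
    return sum(1 for shown in own if rival_domain in shown) / len(own)


# Hypothetical log: each set lists the domains shown in one auction.
auctions = [
    {"us.example", "rival.example"},
    {"us.example"},
    {"rival.example"},
    {"us.example", "rival.example"},
]

share = impression_share(auctions, "us.example")
overlap = overlap_rate(auctions, "us.example", "rival.example")
print(f"Impression share: {share:.0%}, overlap rate: {overlap:.0%}")
```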

Landing page optimization tools

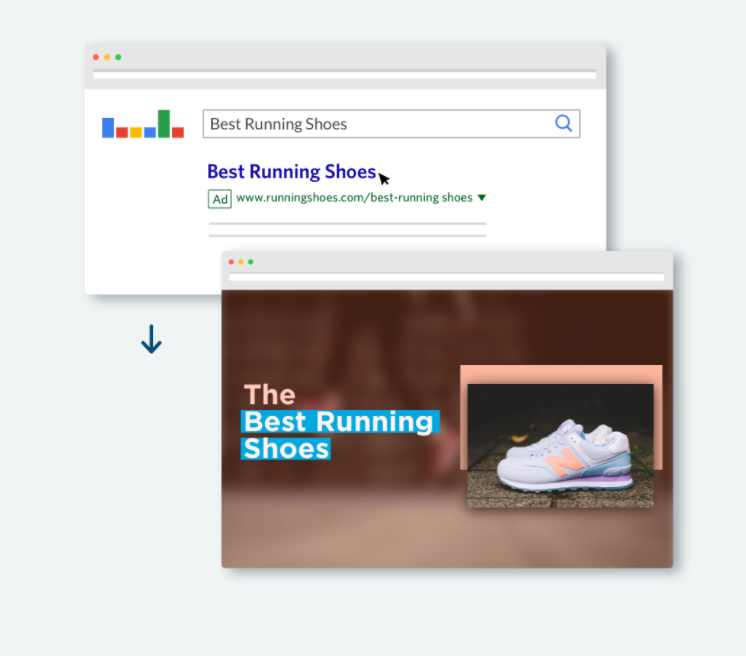

It is essential to optimize the full search experience, from ad copy and keyword targeting, right through to conversion. It is therefore the responsibility of PPC managers to ensure that the on-site experience matches up to the consumer’s expectations.

A variety of tools can help achieve this aim, requiring minimal changes to a page’s source code to run split tests on landing page content and layout. In fact, most of these require no coding skills and allow PPC marketers to make changes that affect only their channel’s customers. The main site experience remains untouched, but paid search visitors will see a tailored landing page based on their intent.

Unbounce has over 100 responsive templates, and its dynamic keyword insertion feature is incredibly useful. This feature adapts the content on a page based on the ad a user clicked, helping to tie together the user journey based on user expectations.
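The general idea behind dynamic keyword insertion can be sketched in a few lines of Python. This is not Unbounce's implementation: it simply assumes the ad's destination URL carries the keyword in a query parameter (named `keyword` here, a hypothetical choice) and swaps it into a headline template, falling back to a default when the parameter is absent.

```python
from html import escape
from string import Template
from urllib.parse import parse_qs, urlparse

# Hypothetical headline template for a landing page.
HEADLINE = Template("Find the best $keyword today")


def render_headline(landing_url, param="keyword", default="deals"):
    """Build the headline from the keyword in the URL, or use the default text."""
    query = parse_qs(urlparse(landing_url).query)
    values = query.get(param)
    keyword = values[0].strip() if values else ""
    # Escape the value, since it comes from the URL and ends up in the page.
    return HEADLINE.substitute(keyword=escape(keyword or default))


with_keyword = render_headline(
    "https://www.example.com/landing?keyword=flights+to+paris"
)
without_keyword = render_headline("https://www.example.com/landing")
print(with_keyword)
print(without_keyword)
```

The default matters as much as the substitution: visitors who arrive without the parameter should still see a headline that reads naturally.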

Brand monitoring tools

Branded keywords should be a consistent revenue driver for any company. Although there is no room to be complacent, even when people are already searching for your brand’s name, these queries tend to provide a sustainable and cost-effective source of PPC traffic.

Unless, of course, the competition tries to steal some of that traffic. Google does have some policies in place to protect brands, but these have proved insufficient to stop companies bidding on their rivals’ brand terms. When this does occur, it also drives up the cost-per-click for branded keywords.

Brandverity provides some further protection for advertisers through automated alerts that are triggered when a competitor encroaches on their branded terms.

This coverage includes Shopping ads, mobile apps, and global search engines.

Custom AdWords scripts

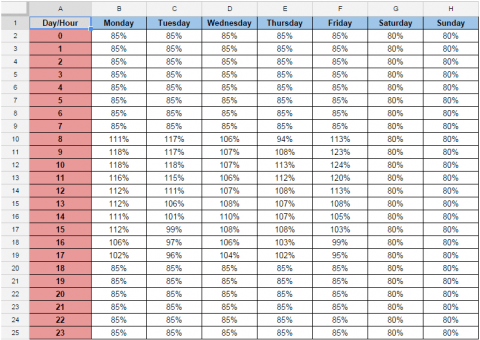

Although custom scripts are not a specific tool, it is worth mentioning the additional benefits they can bring to AdWords performance. These scripts provide extra functionality for everything from more flexible bidding schedules to stock price-based bid adjustments and third-party data integrations.

This fantastic list from Koozai is a comprehensive resource, as is this one from Free Adwords Scripts. PPC agency Brainlabs also provides a useful list of scripts on their website that is typically updated with a new addition every few months.
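To give a sense of the logic behind a more flexible bidding schedule, here is a sketch in Python. Real AdWords scripts are written in JavaScript and run inside the AdWords interface, so treat this purely as an outline of the decision rule: the schedule, multipliers, and function name are hypothetical and no AdWords API is being called.

```python
from datetime import datetime

# Hypothetical schedule: (weekday, start_hour, end_hour, multiplier); Monday is 0.
SCHEDULE = [
    (0, 9, 17, 1.2),   # Monday working hours: bid 20% higher
    (5, 0, 24, 0.8),   # Saturday, all day: bid 20% lower
    (6, 0, 24, 0.8),   # Sunday, all day: bid 20% lower
]


def adjusted_bid(base_bid, when, schedule=SCHEDULE):
    """Apply the first matching schedule multiplier, or return the base bid."""
    for weekday, start, end, multiplier in schedule:
        if when.weekday() == weekday and start <= when.hour < end:
            return round(base_bid * multiplier, 2)
    return base_bid


monday_morning = adjusted_bid(1.00, datetime(2018, 1, 29, 10, 0))
saturday_night = adjusted_bid(1.00, datetime(2018, 2, 3, 22, 0))
tuesday_noon = adjusted_bid(1.00, datetime(2018, 1, 30, 12, 0))
print(monday_morning, saturday_night, tuesday_noon)
```

An actual script would read the schedule from a spreadsheet or config and write the resulting bids back to the account, which is where the published script libraries are most useful.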

Using the tools listed above can add an extra dimension to PPC campaigns and lead to the essential competitive edge that drives growth. As the industry continues to evolve at a rapid rate, these tools should prove more valuable than ever.

source https://searchenginewatch.com/2018/01/31/the-must-have-tools-for-paid-search-success/