“Hey Siri, what is the cost of an iPad near me?”

In today’s internet, a number of specialist search engines exist to help consumers search for and compare things within a specific niche.

As well as search engines like Google and Bing, which crawl the entire web, we have powerful vertical-specific search engines like Skyscanner, Moneysupermarket and Indeed that specialize in surfacing flights, insurance quotes, jobs, and more.

Powerful though web search engines can be, they aren’t capable of delivering the same level of dedicated coverage within a particular industry that vertical search engines are. As a result, many vertical-specific search engines have become go-to destinations for finding a particular type of information – above and beyond even the all-powerful Google.

Yet until recently, one major market remained unsearchable: prices.

If you ask Siri to tell you the cost of an iPad near you, she won’t be able to provide you with an answer, because she doesn’t have the data. Until now, a complete view of prices on the internet has never existed.

Enter Pricesearcher, a search engine that has set out to solve this problem by indexing all of the world’s prices. Pricesearcher provides searchers with detailed information on products, prices, price histories, payment and delivery information, as well as reviews and buyers’ guides to aid in making a purchase decision.

Founder and CEO Samuel Dean calls Pricesearcher “The biggest search engine you’ve never heard of.” Search Engine Watch recently paid a visit to the Pricesearcher offices to find out about the story behind the first search engine for prices, the technical challenge of indexing prices, and why the future of search is vertical.

Pricesearcher: The early days

A product specialist by background, Samuel Dean spent 16 years in the world of ecommerce. He previously held a senior role at eBay as Head of Distributed Ecommerce, and has carried out contract work for companies including Powa Technologies, Inviqa and the UK government department UK Trade & Investment (UKTI).

He first began developing the idea for Pricesearcher in 2011, purchasing the domain Pricesearcher.com in the same year. However, it would be some years before Dean began work on Pricesearcher full-time. Instead, he spent the next few years taking advantage of his ecommerce connections to research the market and understand the challenges he might encounter with the project.

“My career in e-commerce was going great, so I spent my time talking to retailers, speaking with advisors – speaking to as many people as possible that I could access,” explains Dean. “I wanted to do this without pressure, so I gave myself the time to formulate the plan whilst juggling contracting and raising my kids.”

More than this, Dean wanted to make sure that he took the time to get Pricesearcher absolutely right. “We knew we had something that could be big,” he says. “And if you’re going to put your name on a vertical, you take responsibility for it.”

Dean describes himself as a “fan of directories”, relating how he used to pore over the Yellow Pages telephone directory as a child. His childhood also provided the inspiration for Pricesearcher in that his family had very little money while he was growing up, and so they needed to make absolutely sure they got the best price for everything.

Dean wanted to build Pricesearcher to be the tool that his family had needed – a way to know the exact cost of products at a glance, and easily find the cheapest option.

“The world of technology is so advanced – we have self-driving cars and rockets to Mars, yet the act of finding a single price for something across all locations is so laborious. Which I think is ridiculous,” he explains.

Despite how long it took to get Pricesearcher off the ground, Dean wasn’t worried that someone else would launch a competitor search engine before him.

“Technically, it’s a huge challenge,” he says – and one that very few people have been willing to tackle.

There is a significant lack of standardization in the ecommerce space, in the way that retailers list their products, the format that they present them in, and even the barcodes that they use. But rather than solve this by implementing strict formatting requirements for retailers to list their products, making them do the hard work of being present on Pricesearcher (as Google and Amazon do), Pricesearcher was more than willing to come to the retailers.

“Our technological goal was to make listing products on Pricesearcher as easy as uploading photos to Facebook,” says Dean.

As a result, most of the early days of Pricesearcher were devoted to solving these technical challenges for retailers, and standardizing everything as much as possible.
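To make the standardization problem concrete, here is a minimal sketch of mapping differently named feed fields onto one common schema. The alias table and field names are hypothetical illustrations, not Pricesearcher's actual feed format or code.

```python
# Hypothetical alias table: each canonical field lists the names that
# different retailers might use for it in their own product feeds.
FIELD_ALIASES = {
    "title": ("title", "name", "product_name"),
    "price": ("price", "cost", "amount"),
    "barcode": ("barcode", "gtin", "ean", "upc"),
}


def normalize_record(record: dict) -> dict:
    """Map one retailer record onto the canonical field names.

    Keys are matched case-insensitively; fields with no known alias
    are simply left out of the result.
    """
    lowered = {key.strip().lower(): value for key, value in record.items()}
    normalized = {}
    for canonical, aliases in FIELD_ALIASES.items():
        for alias in aliases:
            if alias in lowered:
                normalized[canonical] = lowered[alias]
                break
    return normalized


# Illustrative record only; the values are made up for the example.
example = normalize_record(
    {"Product_Name": "iPad", "COST": "319.00", "EAN": "0123456789012"}
)
```

A real pipeline would also need to handle units, currencies and conflicting barcodes, which this sketch deliberately ignores.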

In 2014, Dean found his first collaborator to work with him on the project: Raja Akhtar, a PHP developer working on a range of ecommerce projects, who came on board as Pricesearcher’s Head of Web Development.

Dean found Akhtar through the freelance website People Per Hour, and the two began working on Pricesearcher together in their spare time, putting together the first lines of code in 2015. The beta version of Pricesearcher launched the following year.

For the first few years, Pricesearcher operated on a shoestring budget, funded entirely out of Dean’s own pocket. However, this didn’t mean that there was any compromise in quality.

“We had to build it like we had much more funding than we did,” says Dean.

They focused on making the user experience natural, and on building a tool that could process any retailer product feed regardless of format. Dean knew that Pricesearcher had to be the best product it could possibly be in order to be able to compete in the same industry as the likes of Google.

“Google has set the bar for search – you have to be at least as good, or be irrelevant,” he says.

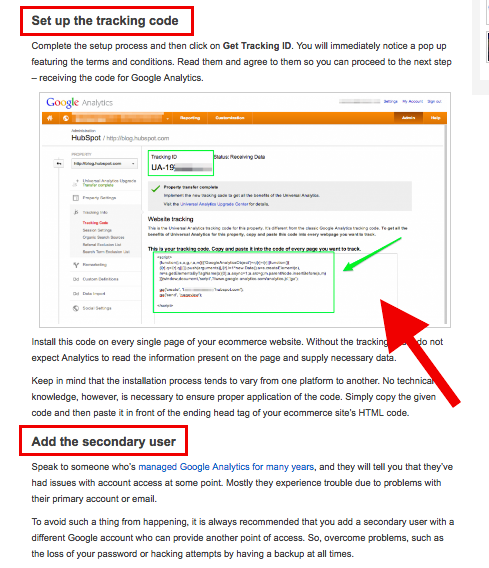

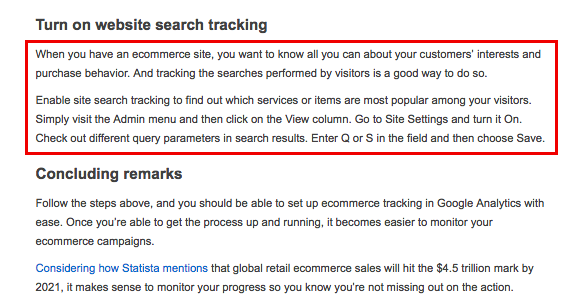

PriceBot and price data

Pricesearcher initially built up its index by directly processing product feeds from retailers. Some early retail partners who joined the search engine in its first year included Amazon, Argos, IKEA, JD Sports, Currys and Mothercare. (As a UK-based search engine, Pricesearcher has primarily focused on indexing UK retailers, but plans to expand more internationally in the near future.)

In the early days, indexing products with Pricesearcher was a fairly lengthy process, taking about 5 hours per product feed. Dean and Akhtar knew that they needed to scale things up dramatically, and in 2015 began working with a freelance DevOps engineer, Vlassios Rizopoulos, to do just that.

Rizopoulos’ work sped up the process of indexing a product feed from 5 hours to around half an hour, and then to under a minute. In 2017 Rizopoulos joined the company as its CTO, and in the same year launched Pricesearcher’s search crawler, PriceBot. This opened up a wealth of additional opportunities for Pricesearcher, as the bot was able to crawl any retailers who didn’t come to them directly, and from there, start a conversation.

“We’re open about crawling websites with PriceBot,” says Dean. “Retailers can choose to block the bot if they want to, or submit a feed to us instead.”
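The article does not say how retailers block the bot. The usual mechanism for any crawler is a robots.txt rule, so the sketch below assumes PriceBot honours robots.txt and identifies itself with the user-agent token "PriceBot". Both are assumptions, and the robots.txt content and URL are made up. It uses Python's standard `urllib.robotparser` to check what such a rule would permit.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a retailer that blocks PriceBot
# but leaves the site open to every other crawler.
ROBOTS_TXT = """\
User-agent: PriceBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


PRODUCT_URL = "https://shop.example/products/ipad"  # placeholder URL
pricebot_allowed = can_crawl(ROBOTS_TXT, "PriceBot", PRODUCT_URL)
other_allowed = can_crawl(ROBOTS_TXT, "SomeOtherBot", PRODUCT_URL)
```

With these rules, the product page is off limits to the "PriceBot" token while remaining available to other crawlers.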

For Pricesearcher, product feeds are preferable to crawl data, but PriceBot provides an option for retailers who don’t have the technical resources to submit a product feed, as well as opening up additional business opportunities. PriceBot crawls the web daily to get data, and many retailers have requested that PriceBot crawl them more frequently in order to get the most up-to-date prices.

Between the accelerated processing speed and the additional opportunities opened up by PriceBot, Pricesearcher’s index went from 4 million products in late 2016 to 500 million in August 2017, and now numbers more than 1.1 billion products. Pricesearcher is currently processing 2,500 UK retailers through PriceBot, and another 4,000 using product feeds.

All of this gives Pricesearcher access to more pricing data than has ever been accumulated in one place – Dean is proud to state that Pricesearcher has even more data at its disposal than eBay. The data set is unique, as no-one else has set out to accumulate this kind of data about pricing, and the possible insights and applications are endless.

At Brighton SEO in September 2017, Dean and Rizopoulos gave a presentation entitled, ‘What we have learnt from indexing over half a billion products’, presenting data insights from Pricesearcher’s initial 500 million product listings.

The insights are fascinating for both retailers and consumers: for example, Pricesearcher found that the average length of a product title was 48 characters (including spaces), with product descriptions averaging 522 characters, or 90 words.

Fewer than half of the products indexed – 44.9% – included shipping costs as an additional field, and two-fifths of products (40.2%) did not provide dimensions such as size and color.

Between December 2016 and September 2017, Pricesearcher also recorded 4 billion price changes globally, with the UK ranking top as the country with the most price changes – one every six days.

It isn’t just Pricesearcher who have visibility over this data – users of the search engine can benefit from it, too. On February 2nd, Pricesearcher launched a new beta feed which displays a pricing history graph next to each product.

This allows consumers to see exactly what the price of a product has been throughout its history – every rise, every discount – and use this to make a judgement about when the best time is to buy.
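As a rough illustration of what a shopper could read off such a graph, the sketch below summarizes a list of dated prices. The data structure, function name and price points are invented for the example and say nothing about how Pricesearcher stores or presents its history.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PricePoint:
    day: date
    pence: int  # integer pence avoids floating-point rounding issues


def summarize_history(history: list) -> dict:
    """Return the current, lowest and highest price in a history."""
    if not history:
        raise ValueError("price history is empty")
    ordered = sorted(history, key=lambda point: point.day)
    prices = [point.pence for point in ordered]
    current = prices[-1]  # most recent observation
    return {
        "current": current,
        "lowest": min(prices),
        "highest": max(prices),
        "is_lowest_so_far": current == min(prices),
    }


# Made-up price points, purely for illustration.
HISTORY = [
    PricePoint(date(2018, 1, 1), 33900),
    PricePoint(date(2018, 1, 15), 31900),
    PricePoint(date(2018, 2, 1), 32900),
]
summary = summarize_history(HISTORY)
```

Here the current price sits between the historical low and high, which might suggest waiting for the next discount rather than buying today.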

“The product history data levels the playing field for retailers,” explains Dean. “Retailers want their customers to know when they have a sale on. This way, any retailer who offers a good price can let consumers know about it – not just the big names.

“And again, no-one else has this kind of data.”

As well as giving visibility over pricing changes and history, Pricesearcher provides several other useful functions for shoppers, including the ability to filter by whether a seller accepts PayPal, delivery information and a returns link.

This is, of course, if retailers make this information available to be featured on Pricesearcher. The data from Pricesearcher’s initial 500 million products shed light on many areas where crucial information was missing from a product listing, which can negatively impact a retailer’s visibility on the search engine.

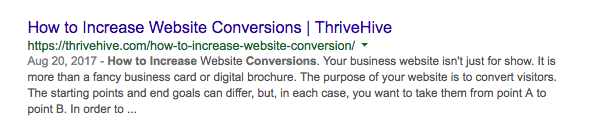

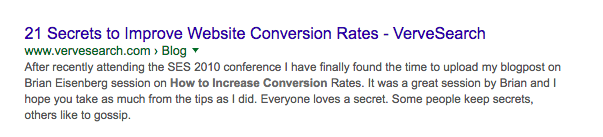

Like all search engines, Pricesearcher has ranking algorithms, and there are certain steps that retailers can take to optimize for Pricesearcher, and give themselves the best chance of a high ranking.

With that in mind, how does ‘Pricesearcher SEO’ work?

How to rank on Pricesearcher

At this stage in its development, Pricesearcher wants to remove the mystery around how retailers can rank well on its search engine. Pricesearcher’s Retail Webmaster and Head of Search, Paul Lovell, is currently focused on developing ranking factors for Pricesearcher, and conceptualizing an ideal product feed.

The team are also working with select SEO agencies to educate them on what a good product feed looks like, and educating retailers about how they can improve their product listings to aid their Pricesearcher ranking.

Retailers can choose to either go down the route of optimizing their product feed for Pricesearcher and submitting that, or optimizing their website for the crawler. In the latter case, only a website’s product pages are of interest to Pricesearcher, so optimizing for Pricesearcher translates into optimizing product pages to make sure all of the important information is present.

At the most basic level, retailers need to have the following fields in order to rank on Pricesearcher: a brand, a detailed product title, and a product description. Category-level information (e.g. garden furniture) also needs to be present – Pricesearcher’s data from its initial 500 million products found that category-level information was not provided in 7.9% of cases.

If retailers submit location data as well, Pricesearcher can list results that are local to the user. Additional fields that can help retailers rank are product quantity, delivery charges, and time to deliver – in short, the more data, the better.
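A retailer could sanity-check a listing against these requirements before submitting it. The sketch below is one way to do that; the field names are hypothetical stand-ins for the fields described above, not Pricesearcher's actual feed specification.

```python
# Hypothetical field names standing in for the fields the article lists.
REQUIRED_FIELDS = ("brand", "title", "description", "category")
OPTIONAL_FIELDS = ("location", "quantity", "delivery_charge", "delivery_time")


def check_listing(listing: dict) -> dict:
    """Report which required and optional fields a listing lacks."""

    def present(field: str) -> bool:
        value = listing.get(field)
        return value is not None and str(value).strip() != ""

    missing_required = [f for f in REQUIRED_FIELDS if not present(f)]
    missing_optional = [f for f in OPTIONAL_FIELDS if not present(f)]
    return {
        "complete": not missing_required,
        "missing_required": missing_required,
        "missing_optional": missing_optional,
    }


# Illustrative listing with no category and an empty quantity.
report = check_listing(
    {
        "brand": "Acme",
        "title": "Acme 4-seat rattan garden sofa set",
        "description": "Weatherproof rattan sofa set with cushions.",
        "quantity": "",
        "delivery_charge": "4.99",
    }
)
```

In this example the listing fails the basic check because it has no category, and the report also shows which optional fields could still be filled in.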

A lot of ‘regular’ search engine optimization tactics also work for Pricesearcher – for example, implementing schema.org markup is very beneficial in communicating to the crawler which fields are relevant to it.
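For readers unfamiliar with schema.org markup, the sketch below builds a JSON-LD description of a product using the public schema.org `Product`, `Brand` and `Offer` types. The product values are placeholders, and which properties PriceBot actually reads is not stated in the article, so treat the choice of fields as an assumption.

```python
import json


def product_jsonld(name, brand, description, price, currency, url):
    """Build a schema.org Product description as a JSON-LD dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
            "url": url,
            "availability": "https://schema.org/InStock",
        },
    }


# Placeholder values, for illustration only.
markup = product_jsonld(
    name="Acme 4-seat rattan garden sofa set",
    brand="Acme",
    description="Weatherproof rattan sofa set with cushions.",
    price="249.00",
    currency="GBP",
    url="https://shop.example/products/rattan-sofa-set",
)

# The serialized JSON would go inside a
# <script type="application/ld+json"> tag on the product page.
jsonld_text = json.dumps(markup, indent=2)
```

Embedding the markup this way keeps the price, brand and description machine-readable without changing how the page looks to shoppers.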

It’s not only retailers who can rank on Pricesearcher; retail-relevant webpages like reviews and buying guides are also featured on the search engine. Pricesearcher’s goal is to provide people with as much information as possible to make a purchase decision, but that decision doesn’t need to be made on Pricesearcher – ultimately, converting a customer is seen as the retailer’s job.

Given Pricesearcher’s role as a facilitator of online purchases, an affiliate model where the search engine earns a commission for every customer it refers who ends up converting seems like a natural way to make money. Smaller search engines like DuckDuckGo have similar models in place to drive revenue.

However, Dean is adamant that this would undermine the neutrality of Pricesearcher, as there would then be an incentive for the search engine to promote results from retailers who had an affiliate model in place.

Instead, Pricesearcher is working on building a PPC model for launch in 2019. The search engine is planning to offer intent-based PPC to retailers, which would allow them to opt in to find out about returning customers, and serve an offer to customers who return and show interest in a product.

Other than PPC, what else is on the Pricesearcher roadmap for the next few years? In a word: lots.

The future of search is vertical

The first phase of Pricesearcher’s journey was all about data acquisition – partnering with retailers, indexing product feeds, and crawling websites. Now, the team are shifting their focus to data science, applying AI and machine learning to Pricesearcher’s vast dataset.

Head of Search Paul Lovell is an analytics expert, and the team are recruiting additional data scientists to work on Pricesearcher, creating training data that will teach machine learning algorithms how to process the dataset.

“It’s easy to deploy AI too soon,” says Dean, “but you need to make sure you develop a strong baseline first, so that’s what we’re doing.”

Pricesearcher will be out of beta by December of this year, by which time the team intend to have all of the prices in the UK (yes, all of them!) listed in Pricesearcher’s index. After the search engine is fully launched, the team will be able to learn from user search volume and use that to refine the search engine.

The Pricesearcher rocket ship – founder Samuel Dean built this by hand to represent the Pricesearcher mission. It references a comment made by Eric Schmidt to Sheryl Sandberg when she interviewed at Google. When she told him that the role didn’t meet any of her criteria and asked why she should work there, he replied: “If you’re offered a seat on a rocket ship, don’t ask what seat. Just get on.”

At the moment, Pricesearcher is still a well-kept secret, although retailers are letting people know that they’re listed on Pricesearcher, and the search engine receives around 1 million organic searches on a monthly basis, with an average of 4.5 searches carried out per user.

Voice and visual search are both on the Pricesearcher roadmap; voice is likely to arrive first, as a lot of APIs for voice search are already in place that allow search engines to provide their data to the likes of Alexa, Siri and Cortana. However, Pricesearcher are also keen to hop on the visual search bandwagon as Google Lens and Pinterest Lens gain traction.

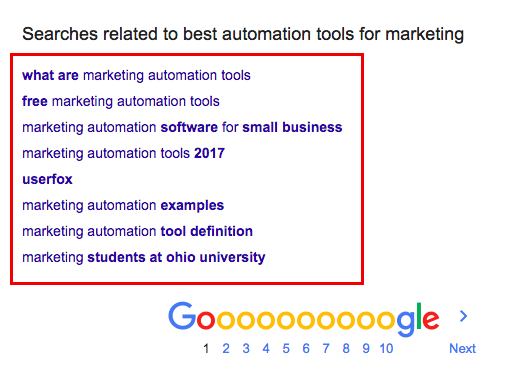

Going forward, Dean is extremely confident about the game-changing potential of Pricesearcher, and moreover, believes that the future of the industry lies in vertical search. He points out that in December 2016, Google’s parent company Alphabet specifically identified vertical search as one of the biggest threats to Google.

“We already carry out ‘specialist searches’ in our offline world, by talking to people who are experts in their particular field,” says Dean.

“We should live in a world of vertical search – and I think we’ll see many more specialist search engines in the future.”

source

https://searchenginewatch.com/2018/02/23/pricesearcher-the-biggest-search-engine-youve-never-heard-of/