For SEO agencies and independent consultants looking for new business, a two-step strategy might be all you need to demonstrate your effectiveness and set yourself apart from competitors. First, identify prospective clients that are well suited to your offering. Second, send them a pitch that clearly communicates the results they could achieve with your services. The key to both steps is data. Conveniently, the same data you use to recognize great potential clients in the first step can also make a powerful case for what your services can deliver for them.

Identifying your ideal SEO clients

The businesses you probably want to approach are those that understand how SEO works and have already invested in it, but that still have a genuine need for your services in order to achieve their full SEO potential. Naturally, a brand that has already climbed to the top of the most relevant search engine results pages (SERPs) isn’t a great candidate because it simply doesn’t need the help. Nor is a business with no SEO experience and no real SERP presence: it might require a particularly hefty effort to be brought up to speed, and may be less likely to invest in, and commit to, an ongoing SEO engagement.

While investigating potential business opportunities within this desired Goldilocks Zone of current SEO success, you might also be looking to target companies in the industries that your agency has previously done well in – both to leverage those past successes and demonstrate relevance to prospective clients with an adjacent audience. This makes it easy for prospects to see themselves in the shoes of those clients you’ve already helped, and for you to apply and repeat your tried-and-tested techniques.

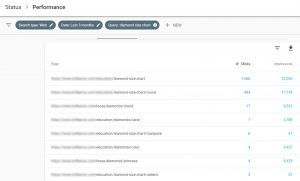

Putting this advice together, you can begin the client search by looking at keywords important to industries you’re familiar with. You will probably want to explore companies that rank in the middle of the SERPs (positions 10–30) and could contend for the top spots with better professional assistance. You can then analyze these sites to determine their potential for SEO improvement. For example, an ideal potential client derives a great deal of its traffic from a keyword for which it still has room to move up in the SERPs.
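The screening step above could be sketched roughly as follows. This is a minimal illustration, assuming you have already exported ranking data from whichever SEO tool you use. The `Ranking` fields, the sample domains, and the 5% traffic-share threshold are hypothetical; only the 10–30 rank band comes from the text.

```python
from dataclasses import dataclass


@dataclass
class Ranking:
    domain: str
    keyword: str
    rank: int             # current SERP position for the keyword
    traffic_share: float  # fraction (0-1) of the site's search traffic from this keyword


def shortlist_prospects(rankings, min_rank=10, max_rank=30, min_traffic_share=0.05):
    """Keep mid-range rankings and sort by dependence on the keyword, highest first."""
    candidates = [
        r for r in rankings
        if min_rank <= r.rank <= max_rank and r.traffic_share >= min_traffic_share
    ]
    return sorted(candidates, key=lambda r: r.traffic_share, reverse=True)


# Made-up sample data, for illustration only.
sample = [
    Ranking("alpha.example", "running shoes", 2, 0.30),
    Ranking("bravo.example", "running shoes", 14, 0.22),
    Ranking("charlie.example", "running shoes", 27, 0.08),
    Ranking("delta.example", "running shoes", 55, 0.01),
]

shortlist = shortlist_prospects(sample)
for r in shortlist:
    print(f"{r.domain}: rank {r.rank}, {r.traffic_share:.0%} of search traffic")
```

Sites already at the top and sites with no real presence both fall outside the band, which mirrors the reasoning above. The thresholds are worth tuning to your own niche.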

Use data to make your pitches irrefutable

While a slick email pitch can go a long way toward winning over new clients, it’s hard to beat the power of data-driven evidence (of course, your pitch can succeed on both style and substance). Be sure to use specific insights about the client’s SEO performance in your pitch, and add visuals to make your case clearer and easier to digest. Also, consider including an SEO case study from your work in an overlapping space as an example of the results they could expect. Your pitch should culminate in a call to action inviting the potential client to get in touch, go deeper into the data, and discuss how to proceed.

There are a number of approaches to framing your pitch. Here are four examples of different appeals you can use:

- Show how you can increase the client’s share of voice for a high-value keyword

- Tell the business about keywords they’re missing out on

- Show where the client could (and should) be building backlinks

- Explain technical SEO issues the client has and how to fix them

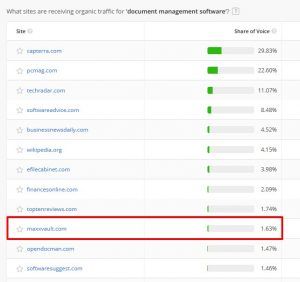

Show how you can increase the client’s share of voice for a high-value keyword

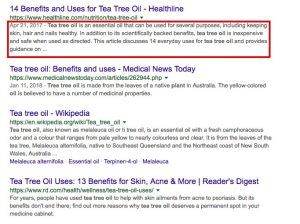

In SERPs, a top ranking is often worth many times more than a lower one. For example, it’s not unusual to see the top result capture 30% of all traffic (or ‘share of voice’) for a given keyword, while the tenth result receives a mere 1%.

Craft a pitch that pairs a result like this with data on how much traffic the keyword actually delivers to the company’s site, and the vast potential to multiply that traffic by improving their share of voice for that keyword becomes clear.
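To put a number on that potential in a pitch, the arithmetic can be sketched as below. This is a back-of-the-envelope estimate, not a forecast: it assumes the total traffic available for the keyword stays constant and that visits scale linearly with share of voice. The function name and the 500-visit figure are hypothetical; the 1% and 30% shares are the illustrative figures from the text.

```python
def projected_visits(current_visits, current_share_pct, target_share_pct):
    """Scale current keyword traffic by the ratio of target to current share of voice."""
    if current_share_pct <= 0:
        raise ValueError("current share of voice must be positive")
    return current_visits * target_share_pct / current_share_pct


# Hypothetical prospect: 500 monthly visits from a keyword where it holds a 1% share.
current = 500
projected = projected_visits(current, current_share_pct=1, target_share_pct=30)
print(f"Projected monthly visits: {projected:.0f} ({projected / current:.0f}x current)")
```

Real click-through curves vary by keyword and SERP layout, so it is safer to present the result as an upper-bound scenario rather than a promise.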

Tell the business about keywords they’re missing out on

Where a client has gaps in their keyword strategy, demonstrating your ability to fill them and deliver traffic the prospect didn’t realize they could be earning goes a long way towards proving your agency’s expertise and value. These keywords can be found by examining sites that share an audience overlap with the potential client, and then studying the keywords they rank highly on. This investigation may yield unexpected results, reinforcing that your agency can drive results in areas the client never would have thought of without your expertise.
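The gap analysis described above amounts to a comparison of keyword sets. The sketch below assumes you already have keyword-to-rank mappings for the prospect and for sites with an overlapping audience; the function name, the top-10 cutoff, and all sample data are hypothetical.

```python
def keyword_gaps(prospect_ranks, overlap_site_ranks, top_n=10):
    """Find keywords where overlapping sites rank in the top_n but the prospect has no ranking.

    Returns a dict of keyword -> list of sites ranking for it, with the keywords
    covered by the most sites first.
    """
    gaps = {}
    for domain, ranks in overlap_site_ranks.items():
        for keyword, rank in ranks.items():
            if rank <= top_n and keyword not in prospect_ranks:
                gaps.setdefault(keyword, []).append(domain)
    ordered = sorted(gaps.items(), key=lambda item: (-len(item[1]), item[0]))
    return dict(ordered)


# Made-up sample data, for illustration only.
prospect = {"trail running shoes": 12, "running socks": 25}
overlap_sites = {
    "bravo.example": {"trail running shoes": 3, "marathon training plan": 4, "running watch reviews": 8},
    "charlie.example": {"marathon training plan": 2, "running socks": 6, "couch to 5k": 15},
    "delta.example": {"marathon training plan": 9, "running watch reviews": 5},
}

gaps = keyword_gaps(prospect, overlap_sites)
for keyword, sites in gaps.items():
    print(f"{keyword}: ranked by {len(sites)} overlapping site(s)")
```

Keywords that several overlapping sites rank well for are usually the easiest to justify in a pitch, which is why the output is ordered by how many sites cover each one.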

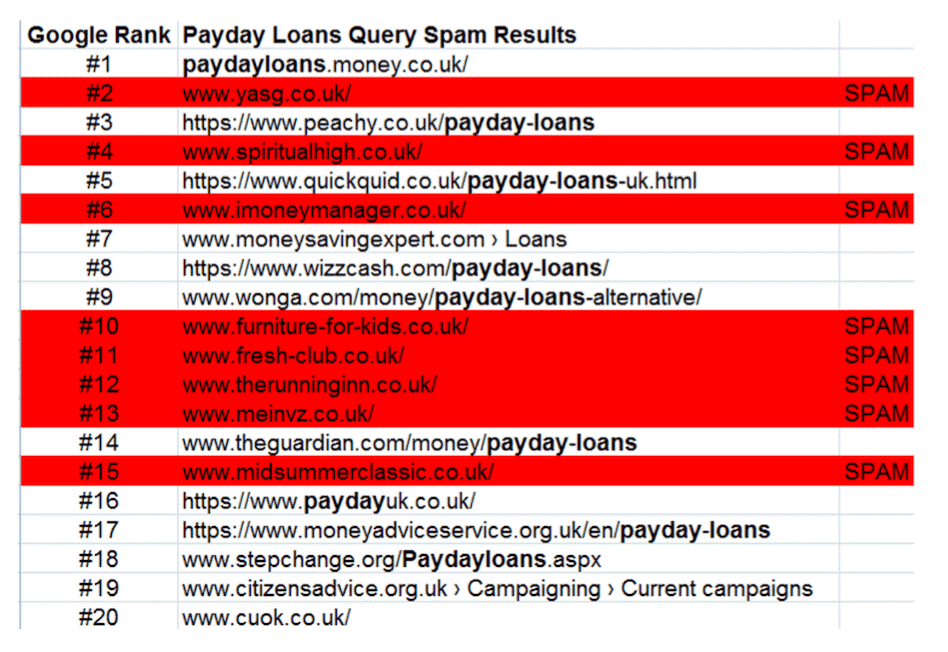

Show where the client could (and should) be building backlinks

Discover gaps where a potential client’s competitors are outperforming them at earning links from other sites, then report these gaps within the framework of a strategy that will close them. If a client clearly isn’t pursuing link building (for example, if the average competitor has several times as many backlinks), be sure to communicate the value of competing on this front.
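A backlink gap report of this kind can be sketched with simple set operations. The example assumes you have lists of referring domains for the prospect and each competitor from a backlink tool of your choice; the function names, the "links to at least two competitors" threshold, and the sample domains are all hypothetical.

```python
from collections import Counter


def link_targets(prospect_links, competitor_links, min_competitors=2):
    """Referring domains that link to several competitors but not to the prospect."""
    counts = Counter()
    for links in competitor_links.values():
        counts.update(set(links) - set(prospect_links))
    hits = [(domain, n) for domain, n in counts.items() if n >= min_competitors]
    return sorted(hits, key=lambda pair: (-pair[1], pair[0]))


def competitor_link_ratio(prospect_links, competitor_links):
    """Average competitor referring-domain count divided by the prospect's count."""
    average = sum(len(set(links)) for links in competitor_links.values()) / len(competitor_links)
    return average / max(len(set(prospect_links)), 1)


# Made-up sample data, for illustration only.
prospect_links = {"blog-a.example", "news-b.example"}
competitor_links = {
    "bravo.example": {"blog-a.example", "news-b.example", "mag-c.example", "forum-d.example"},
    "charlie.example": {"mag-c.example", "forum-d.example", "dir-e.example", "news-b.example"},
    "delta.example": {"mag-c.example", "blog-a.example", "wiki-f.example", "dir-e.example"},
}

targets = link_targets(prospect_links, competitor_links)
ratio = competitor_link_ratio(prospect_links, competitor_links)
print(f"Average competitor has {ratio:.1f}x the prospect's referring domains")
for domain, n in targets:
    print(f"{domain} links to {n} competitors but not the prospect")
```

The ratio gives you the headline figure for the pitch, while the target list shows the prospect concrete sites where a link is plausibly attainable.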

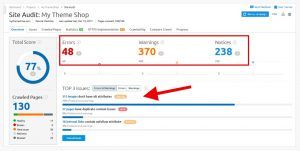

Explain technical SEO issues the client has and how to fix them

To use this approach, perform an audit of the potential client’s site to identify opportunities to improve its SEO practices. This is especially important if your firm specializes in these services.

Providing specific tips and guidance serves as a substantial upfront gesture, and clearly demonstrates the value of an ongoing relationship.
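As a rough idea of what a first-pass audit might check, here is a minimal sketch using only Python's standard library. The specific checks (title tag, meta description, at least one `<h1>`, images with an `alt` attribute) are illustrative assumptions, not a complete audit, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class BasicSeoAudit(HTMLParser):
    """Collects a few on-page signals while parsing an HTML document."""

    def __init__(self):
        super().__init__()
        self.has_title = False
        self.has_meta_description = False
        self.h1_count = 0
        self.images_missing_alt = 0

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self.has_title = True  # only checks that the tag is present
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            # Count the description only if it has non-empty content.
            self.has_meta_description = bool((attrs.get("content") or "").strip())
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and "alt" not in attrs:
            self.images_missing_alt += 1


def audit(html):
    """Return a list of human-readable issues found in the page."""
    parser = BasicSeoAudit()
    parser.feed(html)
    issues = []
    if not parser.has_title:
        issues.append("missing <title>")
    if not parser.has_meta_description:
        issues.append("missing meta description")
    if parser.h1_count == 0:
        issues.append("no <h1> heading")
    if parser.images_missing_alt:
        issues.append(f"{parser.images_missing_alt} image(s) without an alt attribute")
    return issues


# Made-up page, for illustration only.
page = (
    "<html><head><title>Shop</title></head>"
    "<body><p>Welcome</p><img src='a.png'><img src='b.png' alt='Blue shoe'></body></html>"
)
issues = audit(page)
for issue in issues:
    print(issue)
```

A real audit would go much further (crawlability, page speed, structured data, and so on), but even a short list of concrete findings like this gives the prospect something tangible.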

Conclusions

Conveying the specific fixes and additions you would pursue to optimize a client’s site and search strategy serves as a strong introduction. It is also a major first step toward becoming indispensable to the client as the firm hired to execute a winning SEO game plan. Be sure to personalize your pitches as much as possible to stand out from competitors, and make compelling data the crux of your appeal and the proof that you’re the firm to help that business achieve its full SEO potential.

Kim Kosaka is the Director of Marketing at Alexa.com, which provides insights that agencies can use to help clients win their audience and accelerate growth.

source https://searchenginewatch.com/2018/05/31/what-data-do-you-need-to-find-pitch-and-win-new-seo-clients/