Google changes its ranking algorithm almost every day. Sometimes we know about the changes, sometimes we don’t. Some go unnoticed, others turn the SERPs upside down. This cheat sheet covers the most important algorithm updates of recent years, alongside battle-tested advice on how to optimize for them.

Panda

It all started changing in 2011, when Google introduced the first Panda update. Its purpose was to improve the quality of search results by down-ranking low-quality content. Panda marked the beginning of Google’s war against grey-hat SEO. For five years it ran as a separate filter alongside the main search algorithm, until 2016, when it became part of Google’s core algorithm. According to Google, this was done because the search engine no longer expects to make major changes to Panda.

Main Focus

- duplicate content

- keyword stuffing

- thin content

- user-generated spam

- irrelevant content

Best Practice

The very first thing to focus on is internally duplicated content. I recommend running site audits on a regular basis to make sure there are no duplication issues on your site.
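
One way to spot internal duplication during an audit is to compare pages pairwise on their word shingles. The snippet below is a minimal sketch, not how Panda itself works: the function names, the 3-word shingle size, and the 0.8 similarity threshold are all illustrative assumptions, and it expects you to have already extracted the visible text of each page.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word shingles in text (lowercased, punctuation ignored)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: size of intersection over size of union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(pages, threshold=0.8, k=3):
    """pages maps URL -> page text. Return (url1, url2, score) for similar pairs."""
    sets = {url: shingles(text, k) for url, text in pages.items()}
    result = []
    for u, v in combinations(sorted(sets), 2):
        score = jaccard(sets[u], sets[v])
        if score >= threshold:  # assumed cut-off, tune for your site
            result.append((u, v, round(score, 2)))
    return result
```

Pairs returned by `near_duplicates` are candidates for a manual look, not proof of a problem: boilerplate such as navigation and footers should be stripped before comparing.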

External duplication is yet another Panda trigger. So, it’s a good idea to check suspected pages with Copyscape. There are, however, some industries (like online stores with numerous product pages) that simply cannot have 100% unique content. If that’s the case, try to publish more original content and make your product descriptions as outstanding as you can. Another good solution would be letting your customers do the talking by utilizing testimonials, product reviews, comments, etc.

The next thing to do is to look for pages with thin content and fill them with some new, original, and helpful information.

Auditing your site for keyword stuffing is also a must to keep Panda off your site. So, go through your titles, meta description tags, body text, and H1 headings to make sure you’re not overusing keywords in any of these page elements.
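
The check described above can be roughed out in a few lines. This is only a sketch under stated assumptions: Google does not publish a keyword density limit, so the thresholds here (more than one repetition in a short element, more than 3% of the words in the body) are arbitrary starting points, and `audit_page` and its parameters are hypothetical names.

```python
import re


def count_phrase(text, phrase):
    """Return (occurrences of phrase, total words in text), ignoring case and punctuation."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    target = re.findall(r"[a-z0-9']+", phrase.lower())
    if not target:
        return 0, len(words)
    n = len(target)
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == target)
    return hits, len(words)


def audit_page(elements, keyword, max_repeats=1, max_body_density=0.03):
    """elements maps an element name (title, meta, h1, body) to its text.

    Short elements are flagged when the keyword repeats more than max_repeats
    times; the body is flagged when the keyword makes up more than
    max_body_density of its words. Both limits are assumptions.
    """
    flagged = []
    for name, text in elements.items():
        hits, total = count_phrase(text, keyword)
        if name == "body":
            phrase_len = len(keyword.split())
            density = hits * phrase_len / total if total else 0.0
            if density > max_body_density:
                flagged.append(name)
        elif hits > max_repeats:
            flagged.append(name)
    return flagged
```

Treat flagged elements as prompts to reread the copy; natural-sounding text matters more than hitting any particular number.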

Penguin

The Penguin update, launched in 2012, was Google’s second step towards fighting spam. In a nutshell, the main purpose of this algorithm was (and still is) to down-rank sites whose links it deems manipulative. Just like Panda, Penguin has been part of Google’s core algorithm since 2016. It now works in real time, continuously evaluating your backlink profile for link spam.

Main Focus

- Links from spammy sites

- Links from topically irrelevant sites

- Paid links

- Links with overly optimized anchor text

Best Practice

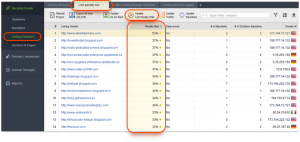

The very first thing to do here is to identify harmful links. Different tools use different methods and formulas to estimate how harmful a link is, and Google has not published the formula Penguin uses. One option is SEO SpyGlass’s Penalty Risk metric, which is designed to approximate the kind of signals Penguin is believed to look at.

After you’ve spotted the spammers, try to get the spammy links removed by contacting the webmasters of the sites that link to you. If you’re dealing with tons of harmful links, or if you don’t hear back from the webmasters, the remaining option is to disavow the links using Google’s Disavow tool.
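
The Disavow tool accepts a plain text file with one URL or one `domain:example.com` entry per line, and lines starting with `#` are treated as comments. As a rough sketch, a list of harmful URLs from your audit can be turned into such a file like this. The function name and the rule of disavowing a whole domain once it has three or more bad URLs are illustrative assumptions, not Google guidance.

```python
from urllib.parse import urlparse


def build_disavow(urls, domain_threshold=3):
    """Build disavow file text from a list of harmful backlink URLs.

    Hosts with at least domain_threshold bad URLs get a domain: entry
    (an assumed rule of thumb); other URLs are listed individually.
    """
    by_host = {}
    for url in urls:
        host = urlparse(url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]
        by_host.setdefault(host, []).append(url)

    lines = ["# Disavow file generated from link audit"]
    for host in sorted(by_host):
        links = by_host[host]
        if len(links) >= domain_threshold:
            lines.append(f"domain:{host}")
        else:
            lines.extend(sorted(links))
    return "\n".join(lines) + "\n"
```

Review the generated file by hand before uploading it, since disavowing good links can hurt as much as keeping bad ones.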

Another habit I highly recommend is monitoring the growth of your link profile. Any unusual spike in new links can be a red flag that someone is pointing spammy links at your site. You most probably won’t be penalized for one or two spammy links, but a sudden influx of toxic backlinks can get you in trouble. On the whole, it pays to check all newly acquired links.
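
Spotting an unusual spike can be automated with a simple rolling baseline. The sketch below assumes you export the number of newly discovered backlinks per week from your link tool; the 4-week window and the "mean plus three standard deviations" rule are arbitrary choices for illustration, and `find_spikes` is a hypothetical helper.

```python
from statistics import mean, pstdev


def find_spikes(counts, window=4, k=3.0):
    """Return indices of periods whose new-link count is far above the recent baseline.

    A period is flagged when its count exceeds mean + k * stdev of the
    preceding `window` periods. The spread is floored at 1.0 so that a
    perfectly flat history does not flag tiny changes.
    """
    spikes = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        threshold = mean(history) + k * max(pstdev(history), 1.0)
        if counts[i] > threshold:
            spikes.append(i)
    return spikes
```

For example, `find_spikes([10, 12, 11, 9, 10, 95, 12])` flags index 5, the week with 95 new links, which is the one worth inspecting link by link.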

EMD

The Exact Match Domain update was introduced by Google in 2012 and does exactly what its name suggests. The intent behind it was to target exact match domains that were also poor-quality sites with thin content. Back in the day, SEOs could skyrocket in search results simply by buying domains that exactly matched keyword phrases and building sites with extremely thin content on them.

Main Focus

- Exact match domains with thin content

Best Practice

There’s nothing wrong with using an exact match domain. The only condition for staying on the safe side is to have quality content on your website. What is more, I wouldn’t advise removing low-quality pages entirely. Try to improve your existing pages with new, original content instead.

It’s also a good idea to run link profile audits on a regular basis to identify spammy inbound links that have low trust signals and sort them out. After that, it’s only right and logical to start building quality links as they are still the major trust and authority signals.

Pirate

You probably still remember the days when sites with pirated content ranked high in the search results and piracy was all over the Internet. Of course, that had to be stopped, and Google reacted with the Pirate Update, which rolled out in 2012 and aimed to penalize websites with a large number of copyright violations. Please note that the update cannot remove your website from the index. It can only penalize it with lower rankings.

Main Focus

- Copyright violations

Best Practice

There’s not much to advise here. The best thing you can do is to publish original content and not distribute others’ content without the copyright owner’s permission.

As you may know, the war with piracy is still not won. So, if you’ve noticed that your competitors use pirated content, it’s only fair to help Google and submit an official request using the Removing Content From Google tool. After that, your request will be handled by Google’s legal team, who can make some manual adjustments to indexed content or sites.

Hummingbird/RankBrain

Since 2013, Google has been working towards a better understanding of search intent. It introduced Hummingbird that year and RankBrain in 2015. The two updates complement one another quite well, as both serve to interpret the search intent behind a query. However, Hummingbird and RankBrain do differ a bit.

Hummingbird is Google’s major algorithm update that deals with understanding search queries (especially long, conversational phrases rather than individual keywords) and providing search results that match search intent.

RankBrain is a machine learning system that works as an addition to Hummingbird. Based on historical data on user behavior, it helps Google process and answer unfamiliar, unique, and original queries.

Main Focus

- Long-tail keywords

- Unfamiliar search queries

- Natural language

- User experience

Best Practice

It’s a good idea to expand your keyword research, paying special attention to related searches and synonyms to diversify your content. Like it or not, the days when you could rely solely on short-tail terms from Google AdWords are gone.

What is more, with search engines’ growing ability to process natural language, unnatural phrasing, especially in titles and meta descriptions, can become a problem.

You can also optimize your content for relevance and comprehensiveness with the help of competitive analysis. There are a lot of tools out there that provide TF-IDF analysis. It can help a lot with discovering relevant terms and concepts that are used by a large number of your top-ranking competitors.
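
To make the TF-IDF idea concrete, here is a minimal sketch that weights terms across a handful of competitor pages and lists the highest-weighted ones your own page does not use. It is an illustration under assumptions, not what any particular tool does: the tokenizer is deliberately naive, the smoothed IDF formula `log((1 + N) / (1 + df)) + 1` is one common variant among several, and `missing_terms` is a hypothetical helper.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def tf_idf(docs):
    """Return one dict per document mapping term -> TF-IDF weight."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens) or 1
        weights.append({
            term: (count / total) * (math.log((1 + n) / (1 + df[term])) + 1)
            for term, count in counts.items()
        })
    return weights


def missing_terms(my_page, competitor_pages, top=5):
    """Terms with the highest summed weight across competitors that my_page lacks."""
    totals = Counter()
    for doc_weights in tf_idf(competitor_pages):
        totals.update(doc_weights)
    mine = set(tokenize(my_page))
    ranked = [term for term, _ in totals.most_common() if term not in mine]
    return ranked[:top]
```

In practice you would remove stop words and feed in the main content of the top-ranking pages for your target query. The output is a list of topics to consider covering, not a list of words to insert.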

Besides all the factors mentioned above, don’t forget that it’s crucial to work on improving user experience. It’s a win-win: you’ll give your users a better experience and avoid being down-ranked in the SERPs. Keep an eye on your pages’ user experience metrics in Google Analytics, especially Bounce Rate and Session Duration.

Pigeon/Possum

Both Pigeon and Possum target local SEO and were made to improve the quality of local search results. The Pigeon Update, rolled out in 2014, was designed to tie Google’s local search algorithm closer to the main one. What’s more, location and distance started to be taken into account when ranking search results. This gave a significant ranking boost to local directory sites and created a much closer connection between Google Web search and Google Maps search.

Two years later, when the Possum Update was launched, Google started to return more varied search results depending on the physical location of the searcher. Basically, the closer you are to a business’s address, the more likely you are to see it among local results. Even a tiny difference in the phrasing of the query now produces different results. It’s worth mentioning that Possum also gave a boost to businesses located just outside the physical city area.

Main Focus

- Showing more authoritative and well-optimized websites in local search results

- Showing search results that are closer to a searcher’s physical location

Best Practice

Now that traditional SEO factors matter more for local SEO, local business owners need to focus their efforts on on-page optimization.

In order to be included in Google’s local index, make sure to create a Google My Business page for your local business. What is more, keep an eye on your NAP (name, address, phone number), as it needs to be consistent across all your local listings.
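
Checking NAP consistency across listings is easy to script once you have collected the records. The sketch below is a simple illustration with hypothetical function names: it ignores case, punctuation, and phone formatting, but it does not reconcile abbreviations, so "St" and "Street" still count as a mismatch.

```python
import re


def _clean(text):
    """Lowercase, drop punctuation, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9 ]", "", text.lower()).split())


def normalize_nap(name, address, phone):
    """Reduce a NAP record to a comparable tuple; the phone keeps digits only."""
    return (_clean(name), _clean(address), re.sub(r"\D", "", phone))


def inconsistent_listings(reference, listings):
    """Return the sources whose NAP differs from the reference record.

    reference is a (name, address, phone) tuple; listings maps a source
    name to such a tuple.
    """
    ref = normalize_nap(*reference)
    return [source for source, nap in listings.items()
            if normalize_nap(*nap) != ref]
```

Any source returned by `inconsistent_listings` is a listing to open and correct by hand, using your Google My Business record as the reference.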

Getting featured in relevant local directories is of the greatest importance as well. As mentioned, the Pigeon update resulted in a significant boost for local directories. So, while it’s always hard to rank in the top results yourself, it’s going to be much easier to get included in the business directories that are likely to rank high.

Now that the location you check your rankings from strongly influences the results you see, it’s a good idea to carry out geo-specific rank tracking. You just need to set up a custom location to check positions from.

Fred

Fred is the unofficial name of a Google update from March 2017 that down-ranked websites with overly aggressive monetization. The algorithm hunts for excessive ads, low-value content, and websites that offer very little user benefit. Websites that exist only to drive revenue, rather than to provide helpful information, are penalized the hardest.

Main Focus

- Aggressive monetization

- Misleading or deceptive ads

- User experience barriers and issues

- Poor mobile compatibility

- Thin content

Best Practice

It’s totally fine to put ads on your website. Just consider scaling back their number and rethinking their placement if they prevent users from reading your content. It’s also worth going through Google’s Search Quality Rater Guidelines to self-evaluate your website.

Just as usual, go on a hunt for pages with thin content and fix them. And of course, continue working towards improving user experience.

Mobile Friendly Update/Mobile-first indexing

Google’s Mobile Friendly Update (2015), also known as Mobilegeddon, was designed to rank pages optimized for mobile devices higher in mobile search and to down-rank pages that are not mobile-friendly. However, simply up- or down-ranking sites according to their mobile-friendliness soon proved insufficient. So, in 2018 Google started rolling out mobile-first indexing, under which it crawls and indexes pages primarily with the smartphone user agent. Websites that only have desktop versions are still indexed as well.

Main Focus

- All sites (both mobile-friendly and not)

Best Practice

If you’re curious whether your site has been migrated to mobile-first indexing, check your Search Console. If you haven’t received a notification, your website most likely has not been moved to the mobile-first index yet. What is more, make sure that your robots.txt file doesn’t block Googlebot from crawling your pages.
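
A quick way to test the robots.txt point is Python’s standard `urllib.robotparser` module. The sketch below parses the file contents from a string so it runs without network access; `blocked_for_googlebot` is a hypothetical helper, and note that Python’s parser applies the first matching rule, which can differ from Googlebot’s own matching in edge cases, so confirm anything surprising in Search Console.

```python
from urllib.robotparser import RobotFileParser


def blocked_for_googlebot(robots_txt, urls, agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for the agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Example robots.txt that accidentally blocks the mobile section for Googlebot.
ROBOTS = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /m/
"""

if __name__ == "__main__":
    pages = ["https://example.com/", "https://example.com/m/page"]
    print(blocked_for_googlebot(ROBOTS, pages))
```

To check a live site, `RobotFileParser` also offers `set_url()` and `read()` to download the file before calling `can_fetch()`.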

If you still haven’t adapted your website for mobile devices, the time to join the race is now. There are a few mobile website configurations to pick from, but Google recommends responsive design. If your website is already adapted for mobile devices, run the Mobile-Friendly Test to see if it meets Google’s requirements.

If you use dynamic serving or separate URLs, make sure that your mobile site contains the same content as your desktop site. What is more, structured data and metadata should be present on both versions of your site.

For more detailed information, consider other recommendations on mobile-first indexing from SMX Munich 2018.

Page Speed Update

And now on to the Page Speed Update, which rolled out in July 2018 and finally made page speed a ranking factor for mobile searches. According to this update, faster websites are supposed to rank higher in search results. In light of this, our team conducted an experiment to track the correlation between page speed and pages’ positions in mobile SERPs after the update.

It turned out that a page’s Optimization Score had a strong correlation with its position in Google search results. More importantly, slow sites with a high Optimization Score were not hit by the update. That brings us to the conclusion that Optimization Score is exactly what needs to be worked on first.

Main Focus

- Slow sites with a low Optimization Score

Best Practice

Google officially lists 9 factors that influence Optimization Score. So, after you’ve analyzed your mobile website’s speed and spotted its weak points (hopefully there are none), consider these 9 rules for improving Optimization Score.

- Try to avoid landing page redirects

- Reduce file sizes by enabling compression

- Improve the response time of your server

- Implement a caching policy

- Minify resources (HTML, CSS, JavaScript)

- Optimize images

- Optimize CSS delivery

- Give preference to visible content

- Remove render-blocking JavaScript
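
Two of the rules above, enabling compression and implementing a caching policy, can be spot-checked from a page’s HTTP response. The following is a rough sketch rather than a replacement for a proper speed tool: `audit_response` is a hypothetical helper that takes response headers and the body you have already fetched, and the gzip figure is only an estimate of the possible savings.

```python
import gzip


def audit_response(headers, body):
    """Check a response for compression and a caching policy.

    headers is a dict of HTTP response headers; body is the response
    content as bytes. Returns a list of human-readable issues.
    """
    headers = {name.lower(): value for name, value in headers.items()}
    issues = []

    encoding = headers.get("content-encoding", "").lower()
    if encoding not in ("gzip", "br", "deflate"):
        # Estimate what gzip would save on this body.
        saved = len(body) - len(gzip.compress(body))
        issues.append(f"no compression (gzip would save about {saved} bytes)")

    if "cache-control" not in headers and "expires" not in headers:
        issues.append("no caching policy")

    return issues
```

An empty list means both checks passed. The remaining rules in the list need a rendering-aware tool to evaluate.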

source https://searchenginewatch.com/cheat-sheet-google-algorithm-updates-2011-2018
